Background of the Project

SITO Mobile is a publicly traded advertising technology (AdTech) company that uses consumer and location data to help brands run targeted media campaigns on mobile devices.

Their software is known as a demand-side platform (DSP), which allows media buyers (e.g. brands, advertisers, and agencies) to purchase ad space from publishers (e.g. websites and mobile apps).

In 2013, SITO Mobile engaged Clearcode to solve several technical challenges they were facing. This partnership between the two companies is still ongoing, with over a dozen full-time engineers working on the project.

As we’ve been providing AWS solutions to SITO Mobile since the beginning of our cooperation, we’ve broken the following section up into two parts — the first part describes the AWS solutions we provided between 2013 and 2018, and the second part describes more recent solutions from 2018 to 2019.

AWS solutions: July 2013-2018

The main challenges we faced:

- After setting up the infrastructure, we found that we had to maintain multiple instances that used many different third-party components and services. This meant we had to perform a lot of manual tasks, such as managing multiple MySQL databases and performing regular backups.

- Another key infrastructure challenge we faced was finding a solution that would allow us to replace faulty instances and update the application’s code with predictable results.

- One of the key features of any demand-side platform is frequency capping, which involves limiting the number of times a particular online visitor is exposed to the same ad in a given timeframe (e.g. max 3 times per 24 hours). We needed to find a solution that would deliver low latency access to user-level data.

- Generating reports based on tracked events was another key challenge, as we had to ensure the reports could be processed and made available in an efficient and timely manner.
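
The frequency-capping rule described above (e.g. max 3 times per 24 hours) can be illustrated with a sliding-window counter. The sketch below is a minimal in-memory illustration only, not the production implementation; the class `FrequencyCapper` and its method names are hypothetical.

```python
from collections import defaultdict, deque


class FrequencyCapper:
    """In-memory sliding-window frequency cap (illustrative only)."""

    def __init__(self, max_impressions=3, window_seconds=24 * 60 * 60):
        self.max_impressions = max_impressions
        self.window_seconds = window_seconds
        # (user_id, ad_id) -> timestamps of recent impressions, oldest first
        self._seen = defaultdict(deque)

    def _prune(self, key, now):
        # Drop impressions that have fallen out of the time window.
        timestamps = self._seen[key]
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        return timestamps

    def can_show(self, user_id, ad_id, now):
        # The ad may be shown only while the user is below the cap.
        return len(self._prune((user_id, ad_id), now)) < self.max_impressions

    def record_impression(self, user_id, ad_id, now):
        self._prune((user_id, ad_id), now).append(now)
```

A bidder would call `can_show` before bidding and `record_impression` once the ad is displayed. Because this lookup sits on the bidding path, the store behind it needs to answer with low latency.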

The solutions

- To improve the performance of the DSP and eliminate redundant and manual processes, we opted for multiple Amazon Web Services, including Amazon Relational Database Service (Amazon RDS).

- We chose Auto Scaling as this allows us to replace unreliable Amazon Elastic Compute Cloud (EC2) instances with new ones based on our pre-configured Amazon Machine Images (AMI).

- To run key functionalities of the bidder (the component of the DSP that buys ad space) and to carry out frequency capping, we chose DynamoDB because of its ability to provide low latency.

- For tracking and reporting on events, we use Elastic Load Balancing (ELB) and utilize Lambda for processing logs.

- We also selected Redshift for querying analytics data (impressions, clicks, various derived metrics drillable by several dimensions, etc.) and for correcting available budgets for bidding when we experience issues with the tracker.

As the projects relate directly to AWS optimization and service selection, the following dates refer to when we implemented the Amazon Web Services listed above.

- Amazon Relational Database Service: January 2016 — 2019.

- Auto Scaling and Amazon Machine Images: January 2017 — 2019.

- DynamoDB: January 2016 — 2019.

- Elastic Load Balancing, Lambda, and Redshift: April 2016 — 2019.

The outcomes and results

- Thanks to RDS and other Amazon Web Services, we can spend much less time managing the components (e.g. databases, instances, etc.), and dedicate more time to working on the DSP’s features.

- The main benefit of Auto Scaling is that we can quickly launch new instances if and when existing ones become unreachable or faulty, which allows us to maintain high availability of the DSP.

- DynamoDB allows us to deliver granular frequency capping with up-to-the-minute precision by querying user-level data at low latency and enables the bidders to maintain high throughput.

- Using ELB, Lambda, and Redshift enables us to make final reports available within a very short time frame – no longer than 15 minutes.

Thanks to AWS and its integrated tools and components, the DSP is able to receive up to 260,000 bid requests per second — aka queries per second (QPS).

AWS solutions: 2018 to 2019

The main challenges we faced

1. Set up West Coast data center

In early 2019, SITO Mobile needed to set up instances of its DSP on the West Coast. The reason for this relates to how online media is transacted.

Whenever a user accesses a mobile app, an ad request is sent from the app to a supply-side platform or ad exchange, which then passes the ad request (aka bid request) to multiple demand-side platforms, including SITO Mobile’s DSP.

From there, SITO Mobile’s DSP returns their bid to the SSP and ad exchange. If their bid is successful (i.e. the highest), then it’s selected, and the ad is displayed to the user.

To ensure the mobile app delivers a good user experience, the ads need to be displayed to the user as quickly as possible. The main technical challenge of this process therefore relates to the speed at which SITO Mobile’s DSP can return their bids to the SSPs and ad exchanges.

If their DSP doesn’t respond to the ad request within a certain time frame (typically between 120 ms and 300 ms), then they’ll be timed out and won’t be able to purchase the ad space. This means lost opportunities, and often lost sales and conversions, for SITO Mobile’s clients.

To ensure SITO Mobile’s DSP is able to respond to ad requests within the set time frame, they need to have instances in the same data centers as the SSPs and ad exchanges.

In addition, the ad requests from mobile apps are sometimes sent to the nearest data center. This means that if SITO Mobile wanted to access more supply and display ads to users on the West Coast, they would need to set up instances there.
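
To illustrate why proximity to the SSPs and ad exchanges matters, the sketch below subtracts the network round trip from the response time frame to show how much time is left for the bidder itself. The function names are hypothetical, and any round-trip or processing figures passed in are illustrative assumptions rather than measurements from the project.

```python
def processing_budget_ms(timeout_ms, network_round_trip_ms):
    """Time left for the bidder to compute a bid after network transit."""
    return timeout_ms - network_round_trip_ms


def can_respond_in_time(timeout_ms, network_round_trip_ms, bidder_processing_ms):
    """True if the bid can be computed and returned before the timeout."""
    budget = processing_budget_ms(timeout_ms, network_round_trip_ms)
    return bidder_processing_ms <= budget
```

For example, with a 120 ms time frame and an assumed 60 ms of bidder processing, an assumed 70 ms cross-country round trip would miss the deadline, while an assumed 2 ms round trip within the same region would not.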

The technical challenges we faced with this project revolved around changing the architecture to support the application in the new data center.

2. Build and implement budget control (aka banker)

SITO Mobile’s DSP receives 100,000+ ad requests per second from SSPs and ad exchanges. However, they are not informed about the outcome of the auction (i.e. whether they won the bid) until a later time.

This creates a problem for advertisers, as they can’t be sure how much of their budget has been spent because of the delay in receiving data from SSPs and ad exchanges. This ultimately leads to overspending.

We initially used Redis to control overspending by recording budget spend, which was queried in the bidder. To further reduce overspending, we implemented a budget control system, also known as a banker.

The solutions

- We set up the bidders and Lambda (for monitoring) in the West Coast data center and copied the Amazon Machine Images (AMI) setup from the East Coast region to deploy the same application in both data centers.

- We used ElastiCache for Redis to store budgets and reservations and to perform fast queries from the banker to get reservations for specific bidders. We also utilized VPC peering to transfer data from the West Coast data center.
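
The idea of reserving budget for specific bidders can be sketched as a small ledger. This is an in-memory illustration under stated assumptions, not the actual banker: the class `Banker`, its methods, and the use of integer currency units are all hypothetical, and the real system keeps this state in Redis.

```python
class Banker:
    """Illustrative in-memory budget reservation ledger."""

    def __init__(self, budgets):
        # campaign_id -> budget not yet reserved (integer currency units)
        self._available = dict(budgets)
        # (campaign_id, bidder_id) -> amount currently reserved
        self._reserved = {}

    def available(self, campaign_id):
        return self._available.get(campaign_id, 0)

    def reserve(self, campaign_id, bidder_id, amount):
        """Grant up to `amount`, never more than the campaign has left."""
        granted = min(amount, self.available(campaign_id))
        if granted > 0:
            self._available[campaign_id] -= granted
            key = (campaign_id, bidder_id)
            self._reserved[key] = self._reserved.get(key, 0) + granted
        return granted

    def settle(self, campaign_id, bidder_id, spent):
        """Record confirmed spend and return the unspent part to the pool."""
        key = (campaign_id, bidder_id)
        reserved = self._reserved.get(key, 0)
        if spent > reserved:
            raise ValueError("spend exceeds reservation")
        self._reserved.pop(key, None)
        self._available[campaign_id] = self.available(campaign_id) + reserved - spent
```

Because each bidder can only spend what it has reserved, the total committed across bidders in both data centers cannot exceed the campaign budget, even while auction outcomes are still unknown.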

AWS services used as part of the solutions

- Amazon Relational Database Service (Amazon RDS)

- Auto Scaling

- Amazon Machine Images (AMI)

- Amazon Elastic Compute Cloud (EC2)

- ElastiCache for Redis

- Elastic Load Balancing (ELB)

- Lambda

- Redshift

The outcomes and results

- SITO Mobile clients can purchase ad space from publishers via ad requests originating from the West Coast of the USA, i.e. the US West (N. California) Region.

- SITO Mobile client brands, advertisers, and agencies can reduce budget overspending across all campaigns.

Why Amazon Web Services?

- We can lower maintenance costs as we no longer need to dedicate resources to maintain the databases’ infrastructure.

- AWS allows us to host the bidder in the same region of the world as the SSPs/ad exchanges, which shortens the path of communication between the DSP and the SSPs/ad exchanges.

- Thanks to the stability and performance offered by AWS, the DSP can receive 260,000 queries per second (QPS).

Case Study

Marketing Automation

Advertisers use SITO Mobile’s advertising technology platform to create, manage, and deliver marketing campaigns.

Below is an overview of the main features and technical processes of the SITO Mobile platform and how they relate to marketing automation:

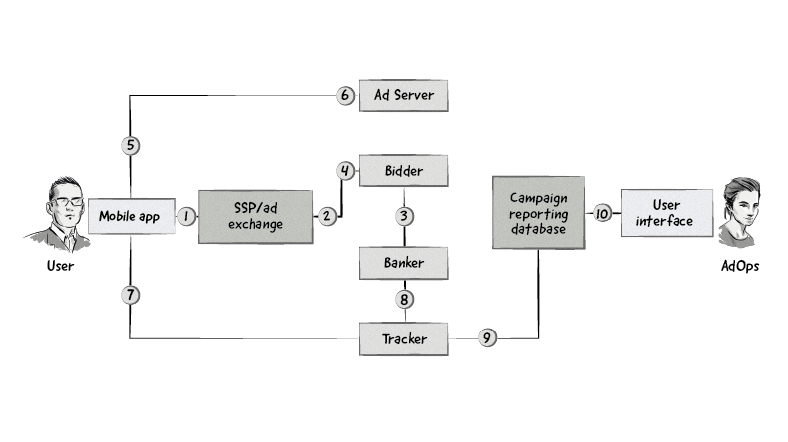

Description of each step:

1. When a user accesses a mobile app containing an ad slot, an ad request containing user data (e.g. device type and location) is sent from the mobile app to a supply-side platform (SSP) and/or ad exchange.

2. The ad request is then passed on to SITO Mobile’s bidder.

3. The bidder requests budget resources from the banker. The banker sends back budget resources to the bidder and adjusts the campaign’s budget accordingly to avoid overspending.

4. The bidder matches the bid request against campaigns created by advertisers. If there is a campaign match, the bidder sends back a bid to the SSP/ad exchange.

When we refer to “match”, we are talking about whether the information in the ad request matches the targeting criteria defined in the advertiser’s campaign. For example, if the user is located in New York and is using an iPhone, then the bidder would search for campaigns that want to target users in New York who have an iPhone.

Assuming the bid is successful (i.e. it is the highest bid), the bid response from the bidder (ad markup) is sent back to the SSP/ad exchange and then onto the mobile app.

5. The ad markup from the bidder loads in the mobile app, which sends a request to SITO Mobile’s ad server to retrieve the ad.

6. The ad server sends back the ad and displays it in the mobile app.

7. SITO Mobile’s tracker collects information about the user and campaign, such as whether an ad was displayed to the user, if the user clicked on the ad, if the user converted (e.g. downloaded the app the ad was promoting) along with other analytics and reporting data.

8. Budget data is passed to the campaign reporting database via the tracker.

9. Campaign and analytics data is passed to the campaign reporting database via the tracker.

10. The campaign reporting database aggregates the data and passes it on to the user interface. The user interface allows advertisers to plan ad campaigns, adjust their budget, and view analytics data about the performance of their campaign (e.g. impressions, clicks, conversions, etc.).

It is important to note that the above processes don’t happen in a linear fashion — many of them would happen simultaneously.
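
The matching described in step 4 can be sketched as a comparison between the fields of the ad request and each campaign's targeting criteria. The field names (`city`, `device`, `bid_price`) and the rule of bidding for the highest-priced matching campaign are assumptions made for illustration, not details of the SITO Mobile bidder.

```python
def matches(ad_request, targeting):
    """True if every targeting criterion is satisfied by the ad request."""
    return all(
        ad_request.get(field) in allowed_values
        for field, allowed_values in targeting.items()
    )


def choose_bid(ad_request, campaigns):
    """Return (campaign_id, bid_price) for the best match, or None."""
    eligible = [c for c in campaigns if matches(ad_request, c["targeting"])]
    if not eligible:
        return None  # no campaign match, so no bid is sent
    best = max(eligible, key=lambda c: c["bid_price"])
    return best["id"], best["bid_price"]


campaigns = [
    {
        "id": "nyc-iphone",
        "targeting": {"city": {"New York"}, "device": {"iPhone"}},
        "bid_price": 2.5,
    },
    {
        "id": "any-android",
        "targeting": {"device": {"Android"}},
        "bid_price": 3.0,
    },
]
bid = choose_bid({"city": "New York", "device": "iPhone"}, campaigns)
```

Here the request from an iPhone user in New York matches only the first campaign, so `bid` refers to `nyc-iphone`; a request that matches no campaign produces no bid.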

Security

We handle secure and reliable storage of customer, marketing campaign, and tracking data in the following ways:

- We store raw logs on S3 so that if a database crashes, we can restore it from a snapshot and re-ingest the logs.

- For the in-memory databases (i.e. ElastiCache for Redis), we enable a specific number of automatic snapshots for AWS to create.

- For security, we have set up network access control and HTTPS for connections between the client and Elastic Load Balancing (ELB).

Campaign management functionality

Below are the main campaign management functionalities:

- User interface: allows advertisers to plan, create, manage and schedule ad campaigns.

- Tracker: collects data from the bidder and banker and passes it on to the campaign reporting database.

- Campaign reporting database: aggregates the data from the tracker and displays it in the user interface, providing up-to-date and accurate data so advertisers can properly manage their campaigns.

Marketing Campaign Delivery and Measurement

Below are the main marketing campaign delivery and measurement components:

- Bidder: bids on ad requests sent from SSPs and ad exchanges via mobile devices.

- Ad server: delivers marketing campaigns to mobile users.

- Tracker: collects data for measuring marketing campaigns.

- Banker: tracks the advertising campaign’s budget to ensure the advertiser doesn’t overspend.

- Campaign reporting database: aggregates the data from the tracker and displays it in the user interface to provide up-to-date and accurate reports about the campaign’s performance and budget.

- User interface: allows advertisers to run and measure their campaigns in one place.

Marketing Campaign Tracking and Analytics

Below are the main marketing campaign tracking and analytics components:

- Tracker: collects data from the bidder and banker and passes it on to the campaign reporting database.

- Campaign reporting database: aggregates the data from the tracker and displays it in the user interface, providing up-to-date and accurate reports about the campaign’s performance and budget.

- User interface: allows advertisers to view analytics, campaign, and budget reports in one place.
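
The aggregation performed by the campaign reporting database can be illustrated in plain Python. This is a sketch of the idea only: the event fields (`type`, `campaign_id`, `device`) and the function name are hypothetical, and the production system aggregates in a database rather than in application code.

```python
from collections import Counter, defaultdict


def aggregate_events(events, dimension):
    """Count events per type for each value of `dimension` and derive CTR."""
    counts = defaultdict(Counter)
    for event in events:
        counts[event[dimension]][event["type"]] += 1

    report = {}
    for value, by_type in counts.items():
        impressions = by_type["impression"]
        clicks = by_type["click"]
        report[value] = {
            "impressions": impressions,
            "clicks": clicks,
            "conversions": by_type["conversion"],
            # Click-through rate; guarded against division by zero.
            "ctr": clicks / impressions if impressions else 0.0,
        }
    return report
```

Calling `aggregate_events(events, "campaign_id")` or `aggregate_events(events, "device")` on the same tracked events gives the kind of drill-down by dimension that advertisers see in the user interface.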

Trigger and Rule-Based Automation

- Bidder: matches incoming ad requests from SSPs and ad exchanges with an advertiser’s campaigns based on predefined rules (i.e. targeting criteria). If the targeting criteria set in the campaign matches the data in the ad request, then the bidder will trigger a bid response.

- Ad server: the bidder tells the ad server which ad to serve based on the data passed in the ad request.

Performance Efficiency

Compute

Below we describe how we selected the right AWS compute options.

Instances:

- We chose instance types based on our own benchmarks. Benchmarks were derived by running A/B tests of performance-sensitive production instances and using memory requirements of the project.

- We compared various EC2 instance types and initially chose M4 due to the balance between the various resources. As the traffic to the DSP increased, we switched to C4 because of its results during performance tests and the heavy workload it can handle. We have since upgraded to C5 as the next generation of the compute-optimized instance type.

Containers:

- When choosing the containers, we evaluated the three AWS container orchestration services — Elastic Container Service (ECS), Elastic Container Service Fargate (ECS Fargate), and Elastic Container Service for Kubernetes (EKS).

- We decided to go with ECS as it provides us with more disk space than what’s typically offered by ECS Fargate.

Functions:

- We selected Lambda for tasks that need to run in parallel with each other in separate small batches and that are sometimes invoked by other AWS services.

- We enabled log processing on our ELB service to run the tracker, which is responsible for real-time actions, such as redirecting users to landing pages, setting conversion cookies, and informing the banker about impressions and money spent.
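
As an illustration of log processing, the sketch below parses a single load balancer access log entry and extracts the tracked request. It assumes the space-separated Classic Load Balancer access log layout, in which the quoted request is the 12th field; the `/imp` path and the query parameter names in the usage example are hypothetical.

```python
import shlex
from urllib.parse import parse_qs, urlsplit


def parse_tracking_event(log_line):
    """Extract method, path, and query parameters from one log entry."""
    # shlex keeps the quoted request and user agent as single fields.
    fields = shlex.split(log_line)
    # Assumed layout: fields[0] is the timestamp, fields[7] the load
    # balancer status code, fields[11] the "METHOD URL PROTOCOL" request.
    method, url, _protocol = fields[11].split(" ")
    parts = urlsplit(url)
    params = {name: values[0] for name, values in parse_qs(parts.query).items()}
    return {
        "timestamp": fields[0],
        "status": int(fields[7]),
        "method": method,
        "path": parts.path,
        "params": params,
    }


sample = (
    '2019-03-01T12:00:00.000000Z tracker-elb 203.0.113.7:51234 10.0.0.12:80 '
    '0.000050 0.001200 0.000040 200 200 0 43 '
    '"GET https://tracker.example.com:443/imp?campaign_id=c1&price=0.0021 HTTP/1.1" '
    '"Mozilla/5.0" ECDHE-RSA-AES128-GCM-SHA256 TLSv1.2'
)
event = parse_tracking_event(sample)
```

A function along these lines could be called from a Lambda handler for each line of a delivered log file, with the resulting events loaded into the reporting database.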

Storage

When deciding on the storage services, our main considerations focused on the existing architecture setup, as well as availability and reliability.

We implemented and used various storage solutions in the past, such as S3 Glacier and Amazon Elastic File System (EFS), but ultimately chose Amazon Elastic Block Store (EBS) as it met our requirements.

Database

Our main consideration when selecting the database solutions was the existing architecture. During the selection process, we considered the following services:

- RDS for PostgreSQL

- ElastiCache for Redis

- Redshift

- Databases on EC2

After evaluating the above database solutions, we went with the following setup:

- Redshift for storing terabytes of event data

- Redis for access to data in real-time by the bidder

- PostgreSQL for all other storage requirements

Network

We used:

- Classic ELBs to route traffic to the various EC2 instances

- ELB logs for gathering traffic data

- CloudFront to serve creative assets

- Route 53 to manage both public and private domains and subdomains

- VPC to separate environments

- Multiple VPCs with VPC peering

We started migrating from Classic Load Balancers to Application Load Balancers, as this lets us run applications in containers and use a single load balancer to route traffic for different domains to different instances.

Resource Review

We review the architectural decisions and approaches when introducing new services or making changes to existing ones.

The key performance metrics we track and observe relate to the business and technical requirements of the DSP itself.

For example, we track the performance of our architecture to ensure the DSP can:

- Respond to bid requests within the defined time frame (e.g. 150ms)

- Generate reports quickly (under 15 minutes)

- Send campaign budget information to the banker so SITO Mobile clients do not overspend

Performance Monitoring

We use CloudWatch with custom metrics from our applications, including alarms and dashboards.
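
A custom metric for bid response latency might be built as follows. This is a sketch under assumptions: the metric name, dimension names, and namespace are hypothetical, and the code only constructs the datum in the shape accepted by CloudWatch's `PutMetricData` operation without sending it.

```python
def latency_metric(service, region, latency_ms):
    """Build one metric datum for CloudWatch's PutMetricData operation."""
    return {
        "MetricName": "BidResponseLatency",  # hypothetical metric name
        "Dimensions": [
            {"Name": "Service", "Value": service},
            {"Name": "Region", "Value": region},
        ],
        "Value": float(latency_ms),
        "Unit": "Milliseconds",
    }


datum = latency_metric("bidder", "us-west-1", 42)

# With boto3 installed and AWS credentials configured, the datum could be
# published like this (the namespace "DSP/Bidder" is an assumption):
#   boto3.client("cloudwatch").put_metric_data(
#       Namespace="DSP/Bidder", MetricData=[datum]
#   )
```

An alarm on such a metric could then notify the team when response times approach the time frame defined by the SSPs and ad exchanges.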

Tradeoffs

One of the main tradeoffs of the application’s setup is swapping automation for manual management of certain services. Although manually managing these services means we spend more time on configuration than we would with automated services, we can configure and optimize more parts of them, allowing us to achieve greater overall performance.