When it comes to developing software, there are a vast number of tools and solutions that can help you better deal with application deployments. Over time, these solutions change depending on our clients’ needs and our knowledge of a specific tool.

There’s also the desire to try something new, especially when it looks promising. On top of that, every developer has their own preferred operating system, favorite tools, and even habits.

To make everyone happy, we use basic stuff like bash scripts and Ansible playbooks. There’s nothing overly fancy here, and we mainly apply methods that aren’t tied to any specific platform or solution. We’re not reinventing the wheel.

In this post, we outline the tools and services we use in our development pipeline, explain why we use them, and offer some helpful tips along the way.

A Brief Overview of Our Development Approach

As Clearcode is a full-service software development company, most of our teams have a dedicated DevOps engineer. However, developers are allowed, and even strongly encouraged, to do some DevOps-related tasks on their own.

Moreover, with the increasing number of team members coming on board, having developers who can handle deployment tasks themselves is an unquestionable benefit.

As a team, we want to write, test, and deploy applications on every existing platform without changing the whole deployment process.

Also, projects should be run, tested, and built on every developer’s own equipment. This can be helpful when working remotely from locations without Internet access or when all current build agent slots are busy and builds are queued.

To help developers gain new skills and expand existing ones, knowledge sharing within and outside of the company is a crucial part of our development pipeline, which typically involves weekly tech meetings where we present and discuss new solutions.

We are not afraid to change anything in our pipeline and we always try to experiment with new technologies. If it works for us, we will stick with it. We don’t retrofit these solutions onto old projects, but we apply them to every new project.

The Tools and Services We Use in Our Development Pipeline

As a company, we work with clients from all over the world. Most of them have their own infrastructures, whether that be Amazon Web Services, Google Cloud Platform, Microsoft Azure or even bare-metal instances.

This means we have to carefully choose the tools and services we use to ensure they are compatible with the various cloud-computing services.

Below are the main tools and services we use in our development pipeline.

Docker

We simply love Docker.

We use Docker extensively, from local development to production deployments. Setting up a working environment in Docker is far easier than creating OS-specific approaches. If you can run Docker containers, that should be enough.

Last year we used Docker containers for a large majority of our projects. We were able to schedule the newly written apps to run on various Docker platforms.

This means that we have one deployment routine, regardless of the application. Of course, there are many different languages and framework-specific approaches to Docker apps, but essentially it’s just a container image pushed to some repository that needs to be run on some Docker-compatible platform.

Running Docker containers on AWS EC2 Container Service is a good start.

ECS tasks helped us a lot. Creating task definitions via AWS console or API is a trivial thing to do. Still, it demands some basic understanding, like how to launch an application, assign all permissions, mount volumes, and so on.

Dealing with tools like ecs-cli to create an AWS version of docker-compose files adds another layer of complexity. This requires some time for reading documentation, getting familiar with the tools, and finally using them on a daily basis.

Unfortunately, ECS task definitions are not usable on other cloud-computing services, such as Google Cloud Platform.

Continuous Delivery

At Clearcode, we want to deliver final products as fast as we can.

To make this possible, we need tools that will help us perform some recurring tasks in an automated way.

This is where Continuous Integration/Continuous Delivery solutions come in.

Due to our love for Docker, the choice was obvious: the Drone platform.

We have been using Drone for a few months now, and even though it’s still under development, we’re satisfied with the results. We take advantage of features like isolated build steps within one workspace, secrets, step conditions, notifications, publishing images on different platforms, tons of plugins, and many more.

Our new pipeline with Drone has reduced build and deployment time dramatically.

We also prefer to keep the .drone.yml file within the project’s code, so the pipeline definition is versioned together with the application.

Additionally, having as many Drone agents as we want has eliminated build queues caused by insufficient build slots.

Kubernetes

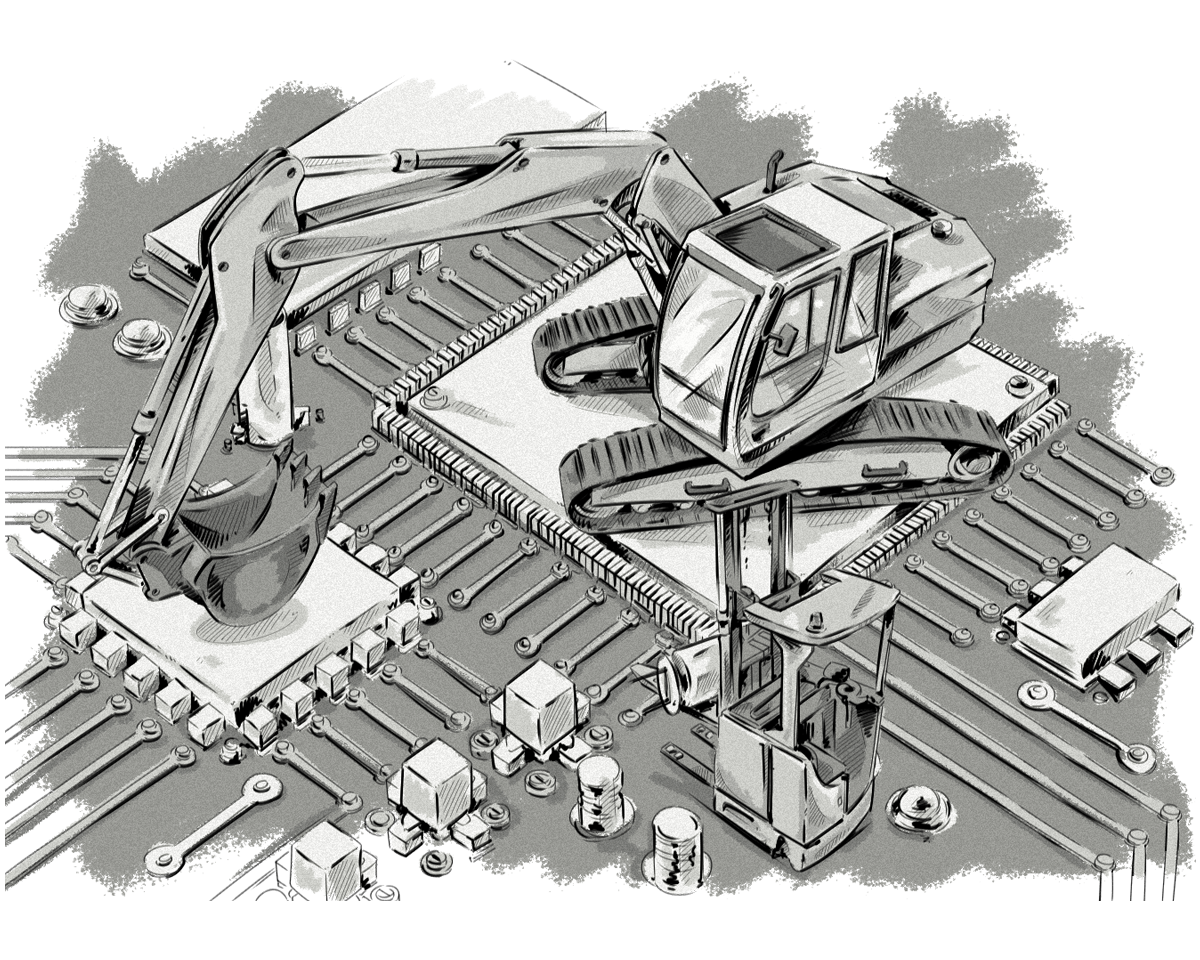

Having a platform-independent environment with a strong use of Docker gave us an idea to adopt Kubernetes as our default orchestration tool.

It seemed cumbersome and confusing at the beginning, but after a few days of working with Kubernetes, our developers got used to it — they even started to like it and began using it locally.

Below are some key elements of our orchestration process:

Zero downtime deployments

This may sound funny, but there were actually times when deploying applications without any interruptions was labelled as progress.

In 2018, the default deployment mode should be zero downtime, if it isn’t already. This means that every application we create must be deployed in this way.

Runs on local environment

Even the most complex application stacks must be run on a developer’s local machine without any code changes. Sometimes it involves running over 20 microservices communicating with each other.

Independent containers

Containers must be independent from each other, each with its own health checks. Kubernetes’ liveness and readiness health checks are very handy for distinguishing a container that has only just started from a service that is actually ready to work.
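As an illustration of the difference between the two checks, here is a minimal sketch of an application exposing separate liveness and readiness endpoints with Python’s standard library. The `/ready` path, the `AppState` class, the helper names, and the port are assumptions made for this example, not part of our actual services.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class AppState:
    """Hypothetical holder for a readiness flag, set once startup work is done."""

    def __init__(self):
        self._ready = threading.Event()

    def mark_ready(self):
        self._ready.set()

    def is_ready(self):
        return self._ready.is_set()


def probe_status(path, state):
    """Return the HTTP status code a probe request on `path` should receive."""
    if path == "/healthz":
        # Liveness: the process is up and able to answer requests.
        return 200
    if path == "/ready":
        # Readiness: succeed only once the app can actually serve traffic.
        return 200 if state.is_ready() else 503
    return 404


def make_handler(state):
    """Build a request handler class bound to the given application state."""

    class ProbeHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            self.send_response(probe_status(self.path, state))
            self.end_headers()

        def log_message(self, *args):
            # Keep probe traffic out of the application log.
            pass

    return ProbeHandler


def serve(state, port=8080):
    """Start the probe server; blocks until the process is stopped."""
    HTTPServer(("0.0.0.0", port), make_handler(state)).serve_forever()
```

In a setup like this, the container’s liveness probe would point at `/healthz` and its readiness probe at `/ready`, and the application would call `state.mark_ready()` after its own startup work (opening connections, warming caches) has finished.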

Easy upgrades of applications

Kubernetes’ Rolling Update feature is a perfect tool for upgrading containers.

It makes no difference whether it’s just one container or many of them as it allows you to control the whole update process.

All you need to do is identify how many containers must be updated at once, how many seconds a healthy state takes to be recognized as a success, and so on.

Of course, there is a fast way to implement a rollback.
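To make those knobs concrete, the sketch below builds a Deployment manifest with an explicit rolling-update policy. The function name, the default values, and the example image are assumptions for illustration; `maxSurge`, `maxUnavailable`, and `minReadySeconds` are the standard Deployment fields that control how many containers change at once and how long a new one must stay healthy before it counts as available.

```python
import json


def rolling_update_deployment(name, image, replicas, max_surge=1,
                              max_unavailable=0, min_ready_seconds=10):
    """Build an apps/v1 Deployment manifest with an explicit rolling-update policy."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # A new pod must stay ready this long before it counts as available.
            "minReadySeconds": min_ready_seconds,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {
                    # Extra pods allowed above the desired replica count.
                    "maxSurge": max_surge,
                    # Pods that may be unavailable during the update.
                    "maxUnavailable": max_unavailable,
                },
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


def to_json(manifest):
    """Serialize the manifest; kubectl accepts JSON as well as YAML."""
    return json.dumps(manifest, indent=2)
```

The JSON output can be piped to `kubectl apply -f -`. With `max_unavailable=0`, the full replica count stays in service throughout the update, and `kubectl rollout undo deployment/<name>` returns to the previous revision if something goes wrong.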

Sensitive data management

Almost every application has some sort of sensitive piece of data, for example, database credentials and tokens.

However, every cloud provider has its own way of dealing with this data.

Unfortunately, these solutions are not interchangeable. For example, fetching encrypted data from AWS S3 when the application starts relies on instance roles, which don’t work on GCP, and it requires installing additional software (aws-cli).

Kubernetes Secrets help us to unify this approach, even on a local machine.
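From the application’s point of view, a secret mounted as a volume is just a file, which is what makes the approach portable. The following is a minimal sketch of how an application might read one; the mount directory, the environment-variable fallback, and the function name are assumptions for this example.

```python
import os
from pathlib import Path


def read_secret(name, mount_dir="/var/run/secrets/app"):
    """Return a secret from a mounted file, falling back to an environment variable.

    `mount_dir` is a hypothetical mount path; Kubernetes writes each key of a
    mounted Secret as a separate file inside the volume.
    """
    secret_file = Path(mount_dir) / name
    if secret_file.is_file():
        return secret_file.read_text().strip()

    # Fallback for secrets injected as environment variables, e.g. DB_PASSWORD.
    env_name = name.upper().replace("-", "_")
    value = os.environ.get(env_name)
    if value is None:
        raise KeyError(f"secret {name!r} not found in {mount_dir} or ${env_name}")
    return value
```

Because the code only depends on a file path or an environment variable, it behaves the same on AWS, GCP, or a local cluster, with no provider-specific client installed in the image.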

Service discovery

In a dynamic environment, when new containers start and old ones stop, there needs to be a way to inform other services that the old containers no longer exist.

That’s where Kubernetes Services come in.

Instead of “talking” to specific containers, which may have changed, applications communicate with Services. Each Service exposes one or many pods to other applications under a stable name.
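In practice, this means a client only needs the service’s name. The sketch below builds the in-cluster address of a service from the standard DNS naming scheme, `<service>.<namespace>.svc.<cluster domain>`. The function name, the example service, and the default values are assumptions, and `cluster.local` is only the common default cluster domain.

```python
def service_url(service, namespace="default", port=80, scheme="http",
                cluster_domain="cluster.local"):
    """Build the in-cluster URL of a Kubernetes Service from its DNS name."""
    host = f"{service}.{namespace}.svc.{cluster_domain}"
    return f"{scheme}://{host}:{port}"


# Hypothetical example: an "orders" service in the "shop" namespace.
ORDERS_API = service_url("orders", namespace="shop", port=8080)
```

The client never needs to know which pods are behind `orders` or how many of them exist at a given moment.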

Scaling

Dynamic scaling is a must in today’s world.

We write our applications with this in mind from day one of the product’s life.

Sometimes, the product’s traffic reaches levels higher than the clients anticipated, especially at the beginning when it has just been released to initial users.

That’s why it’s vital for us to have this possibility from the beginning, instead of worrying about what to do when it’s too late. With Kubernetes scaling, every single service can be transformed to handle the new traffic volume.
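One way to automate this is the Horizontal Pod Autoscaler, which we mention here as an assumption rather than a description of any particular project. Its documented core rule is `desired = ceil(current_replicas * current_metric / target_metric)`. The sketch below reproduces only that rule plus min/max clamping; the real autoscaler also applies a tolerance and stabilization behaviour that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified version of the Horizontal Pod Autoscaler scaling rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the replica count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas averaging 90% CPU against a 60% target would be scaled to six.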

Works on every developer’s system

We only use macOS and Linux machines, so it’s quite easy to handle Kubernetes on these two operating systems.

This is especially important as the new version of Docker for Mac comes with Kubernetes support. Imagine running an Ingress load balancer on your local machine with just one command. On Linux, minikube provides a local cluster.

Guidelines

Below we share a few guidelines which make our work with Kubernetes easy and allow us to avoid problems at every stage of the project’s life cycle.

We hope you find them useful.

- Build Docker images as small as possible.

- Use alpine-based images.

- Make use of Docker’s multistage builds.

- Minimize the number of layers.

- Tag your images with an application version, git repo hash or even build number. DO NOT use the “latest” tag.

- Make use of resource limits.

- Use Kubernetes labels. They are free.

- Use the good old Makefile to automate regularly performed tasks.

- Avoid installing unnecessary packages.

- Use init containers.

- Use liveness and readiness health checks.

- Use namespaces.

- Use .dockerignore files.

- Use secrets, mount them in a read-only mode. Make sure you distribute them securely.

- Avoid root user inside containers.

- Log to stderr and stdout.

- Use DaemonSets (fluentd).

- Use secure and authorized images.

- Limit access to Kubernetes cluster to authorised users only.

- Use Role-Based Access Control (RBAC).

- Do not build sensitive data into containers. Use secrets instead.

- Add Kubernetes manifests to your code repository.

- Use a “deploy first” approach.

- Log application metrics and send them to an appropriate place. We use InfluxDB.

- Use Helm.

- Expose only required services.

- Use Ingress for routing and path-based services.

- Use /healthz health check path when possible.
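As an example of the tagging guideline, here is a minimal sketch of a helper that a build script could use to produce an immutable image tag from the application version, the git commit hash, and an optional build number. The function names, the tag layout, and the example registry are assumptions; the character and length limits follow Docker’s tag rules.

```python
import re


def image_tag(version, git_sha, build_number=None):
    """Compose a tag such as 1.4.2-9fceb02-b57 from version, commit and build."""
    if not re.fullmatch(r"[0-9a-fA-F]{7,40}", git_sha):
        raise ValueError(f"not a git commit hash: {git_sha!r}")
    parts = [version, git_sha[:7].lower()]
    if build_number is not None:
        parts.append(f"b{build_number}")
    tag = "-".join(parts)
    # Docker tags: letters, digits, '_', '.', '-'; no leading '.' or '-'; max 128 chars.
    if tag == "latest" or not re.fullmatch(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}", tag):
        raise ValueError(f"invalid image tag: {tag!r}")
    return tag


def image_reference(repository, tag):
    """Join a repository name and a tag into a full image reference."""
    return f"{repository}:{tag}"
```

A tag built this way identifies exactly one build, so a rollback or a bug report can always be traced to a specific commit, which “latest” cannot offer.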