Data and Event Tracking Component

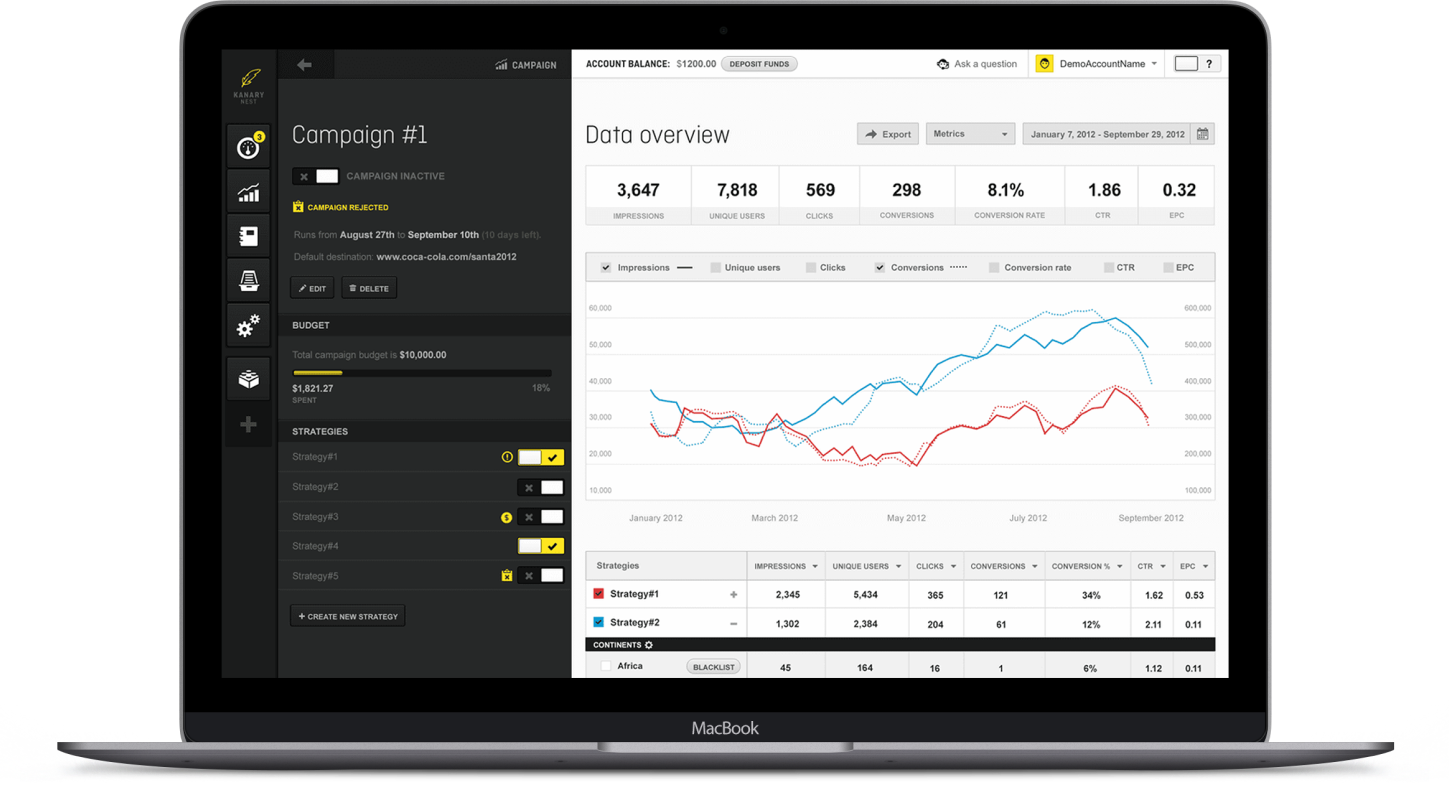

This is the story of how Clearcode scoped and built the MVP of the tracker, one of our internal projects.

Every AdTech and MarTech platform is different.

Demand-side platforms (DSPs), for example, help advertisers purchase inventory from publishers on an impression-by-impression basis via real-time bidding (RTB).

Customer data platforms (CDPs), on the other hand, collect first-party data from a range of sources, create single customer views (SCVs), and push audiences to other systems and tools.

Although the functionality and goals of AdTech and MarTech platforms vary, they all have one thing in common: they need a component that collects data from different sources (e.g. websites) and delivers it to different systems (e.g. DSPs).

This component is known as a tracker.

CLIENT: Tech company

INDUSTRY: Programmatic advertising

SERVICE: AdTech

COUNTRY: Poland

About our Tracker project

One of our development teams completed an internal research and development project to build a tracker that can collect a range of events, such as:

- Impressions

- Clicks

- Video metrics (e.g. watch time and video completion rates)

Our project focused on building a tracker for a DSP, but it can be adapted to any AdTech or MarTech platform that needs to collect event data.

The main goal of the project was to build a tracker with core functionality, example extensions, example deployment scripts, and documentation, and to allow the tracker to be integrated with other components, such as analytics tools and reporting databases.

With our tracker project, we followed the same development process that we apply to all our AdTech and MarTech development projects for our clients.

Key points

Product

The tracker is used to collect event data (e.g. impressions, clicks, and video metrics) from different sources.

Solution

We designed and built our own tracker system that can be used for future client projects.

Project goal

The tracker is part of our AdTech Foundations: a set of components that help our clients save months of development time and tens of thousands of dollars when building AdTech and MarTech platforms.

Technologies

We chose the programming languages and technology stacks by running benchmark tests.

“Our tracker can be used to collect event data for AdTech & MarTech platforms.”

Krzysiek Trębicki

PROJECT MANAGER OF TRACKER

The Goal

The main goal of the project was to build a tracker with core functionality, example extensions, example deployment scripts, and documentation, and to allow the tracker to be integrated with other components, such as analytics tools and reporting databases.

Creating a story map

We started the project by creating a story map to help us:

- Define the key components and features of the tracker.

- Identify and solve the main technical challenges, such as performance, speed, and scalability.

- Decide what events the tracker should collect and how it will do it.

Defining the functional requirements

Based on the results from the story mapping sessions, we created a list of functional requirements for the tracker. The functional requirements relate to the features and processes of the tracker.

We identified that the tracker would need to:

- Receive and handle multiple types of requests (impressions, clicks, conversions, etc.).

- Process requests and generate events with proper dimensions and metrics.

- Allow request types and preprocessing of events to be configured.

- Expose an API so that plugins can extend its basic functionality.

- Expose generated events to specified collectors (plugins) and allow them to be attached to specific event types by configuration.

- Include built-in log and queue collectors.

- Include a built-in plugin for our budget management component, banker.

- Support request redirects.

Defining the non-functional requirements

The non-functional requirements don't describe what the tracker does. Instead, they cover its performance, scalability, security, delivery, and interoperability.

We identified the following non-functional requirements for the tracker:

- High availability (99.999%).

- High throughput (2,000 requests per second).

- Low request-processing latency (15 ms on average).

- Security:

  - Privacy: e.g. ensuring we don’t expose personal data to third parties when we need to share it with other components.

  - Availability: ensuring the platform won’t be impacted by a distributed denial-of-service (DDoS) attack made up of bad requests.

- Deployable to AWS, GCP, and Azure.

- Ability to integrate with custom plugins.

- Ability to integrate with other DSP components such as the banker.

- Platform scalability — the tracker needs to be able to handle large increases in events.

- Idempotency: processing the same logs more than once (e.g. after errors or temporary unavailability) must not create duplicate events.

Selecting the architecture and tech stack

We selected the architecture and tech stack for the tracker project by:

- Researching benchmarking tools used for testing the performance of the programming languages.

- Comparing different variations of the technology stack.

The main metrics we wanted to test were:

- Latency of requests

- Requests per second

- Error ratio

- Latency percentiles

Technology Stack Comparison

We decided to test the following programming languages and technologies:

- Golang (aka Go) because it’s growing in popularity and we were familiar with it.

- Rust because other development teams have used it to build trackers in the past.

- Python because we are very familiar with it.

- OpenResty (Nginx + Lua) because it allows us to create a tracker using just an Nginx HTTP server.

We ran initial benchmark tests on all the technologies using several benchmarking tools, and later focused on running more tests using wrk2, Gatling, and Locust.

All three tools were configured to allow for maximum elasticity.

The results and the chosen programming language

The two best-performing technologies were OpenResty (Nginx + Lua) and Golang. They had similar results across all benchmarking tools, so we chose Golang because of current market demand and its popularity.

The MVP Scoping Phase took 1 sprint (2 weeks) to complete.

The MVP development phase

With the MVP Scoping Phase completed and our architecture and tech stack selected, we began building the MVP of the tracker.

We built the tracker in 5 sprints (10 weeks).

Below is an overview of what we produced in each sprint.

Sprint 1

What we achieved and built in this sprint:

- Implemented basic functionality.

- Configured event types.

- Created benchmarks as a continuous integration (CI) step.

- Produced visual benchmark results.

Sprint 2

What we achieved and built in this sprint:

- Created and tested request redirects.

- Performed tests on the tracker.

Sprint 3

What we achieved and built in this sprint:

- Built a plugin for the log storage component.

- Enhanced the configuration so that any tracking path can be configured.

- Produced the tracker’s documentation.

Sprint 4

What we achieved and built in this sprint:

- Built a plugin for our banker (a budget management component).

- Configured event extraction.

- Ran end-to-end tests.

Sprint 5

What we achieved and built in this sprint:

- Produced auto-generated documentation.

- Created a quickstart guide.

- Built Docker images and pushed them to our internal registry.

The technologies we used

- TypeScript

- JavaScript

- Python

- React

- Node.js

- Go

- Angular

The result

Our Tracker component is designed to:

- Speed up the development phase of your custom-built AdTech or MarTech platform.

- Integrate into your existing AdTech or MarTech platforms to collect data.